The Optimization Logic That Improves Your Core ETL Performance: How to Enable High-Performance Processing to Automate Data

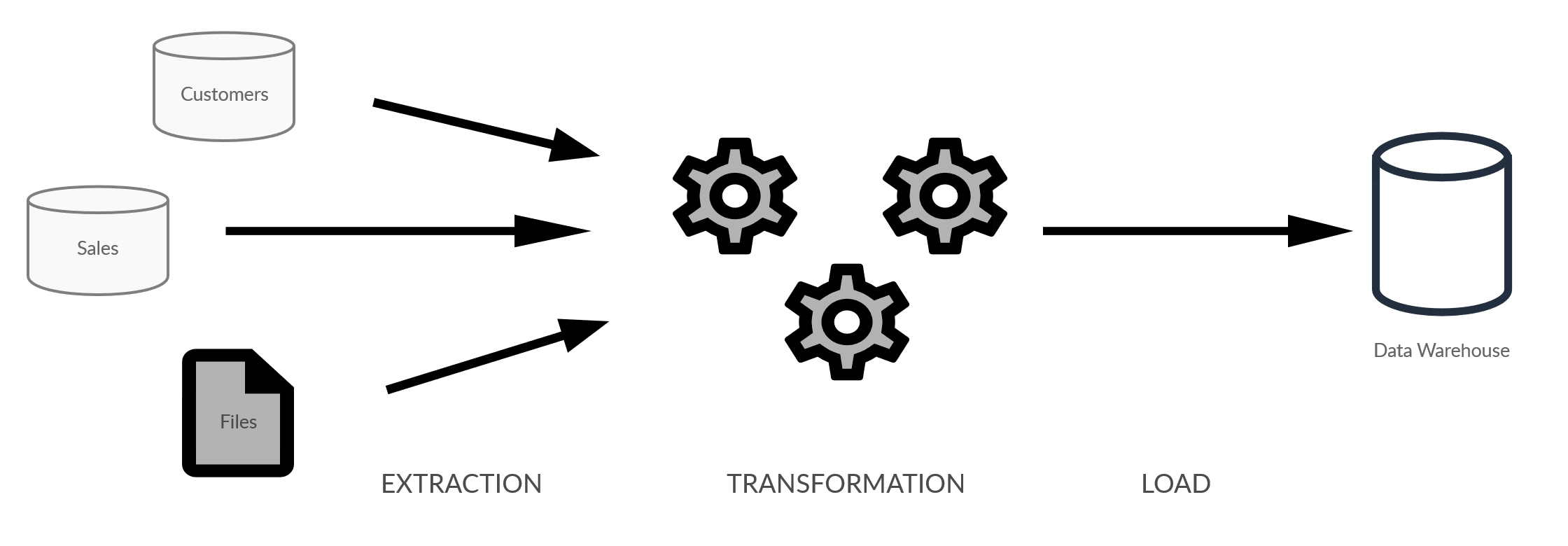

Optimizing the performance of extract, transform, load (ETL) processes is crucial for efficient data integration and processing. This post walks you through best practices for keeping your ETL runtimes optimal and consistent, with tips and strategies for improving the performance of your ETL workflows.

How to Enable High-Performance ETL Processing to Automate Data

By implementing these optimization techniques, you can improve the speed, scalability, and reliability of your data pipelines and keep data processing smooth and efficient. Tackle bottlenecks before anything else: make sure you log metrics such as run time, the number of records processed, and hardware usage.

Check how many resources each part of the process consumes, and address the biggest bottlenecks first.
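As a minimal sketch of that kind of per-stage logging, the Python below wraps each stage in a context manager that records wall-clock time, the number of records processed, and peak memory. It assumes an in-process Python pipeline; `track_stage` and `STAGE_METRICS` are hypothetical names, and `tracemalloc` only sees Python-level allocations, so it approximates rather than replaces OS-level hardware metrics.

```python
import logging
import time
import tracemalloc
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("etl.metrics")

# Hypothetical in-memory store; a real pipeline might ship these to a monitoring system.
STAGE_METRICS = []


@contextmanager
def track_stage(name):
    """Record wall-clock time, records processed, and peak Python memory for one stage.

    Not safe for nested stages: the inner tracemalloc.stop() would end the outer trace.
    """
    stats = {"stage": name, "records": 0}
    tracemalloc.start()
    start = time.perf_counter()
    try:
        yield stats  # the stage body sets stats["records"]
    finally:
        stats["seconds"] = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        stats["peak_kib"] = peak / 1024
        STAGE_METRICS.append(stats)
        log.info("%(stage)s: %(records)d records in %(seconds).3fs, peak %(peak_kib).1f KiB", stats)


# Usage with two toy stages (hypothetical data).
with track_stage("extract") as s:
    rows = [{"id": i, "amount": i * 1.5} for i in range(10_000)]
    s["records"] = len(rows)

with track_stage("transform") as s:
    totals = [r["amount"] * 2 for r in rows]
    s["records"] = len(totals)

# The slowest stage is the first candidate for tuning.
slowest = max(STAGE_METRICS, key=lambda m: m["seconds"])
```

Sorting `STAGE_METRICS` by `seconds` or `peak_kib` gives a first, data-driven guess at which stage to tune.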

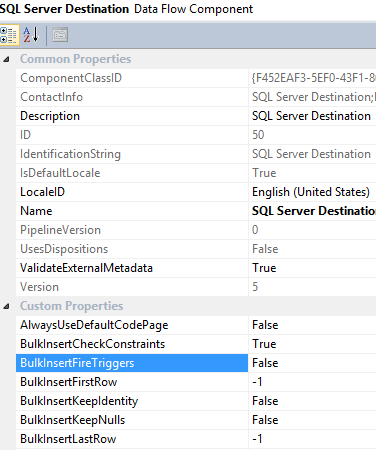

Learn actionable strategies to improve speed, resource usage, and cost efficiency, and turn ETL into a strategic asset for your organization. By optimizing your transformation processes, you can substantially improve the speed and efficiency of your ETL pipeline without sacrificing data quality.

Techniques for optimizing data transformations
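One common transformation technique is replacing a row-by-row nested lookup with a hash-based one. The sketch below is an illustration under assumptions: the `orders` and `customers` records and the `enrich_*` functions are hypothetical, and `customer_id` is assumed to be unique among customers. Both functions return the same rows, but the hashed version does roughly one dictionary probe per order instead of scanning every customer.

```python
def enrich_nested(orders, customers):
    """Baseline: for each order, scan all customers (O(orders * customers))."""
    out = []
    for o in orders:
        for c in customers:
            if c["customer_id"] == o["customer_id"]:
                out.append({**o, "region": c["region"]})
                break
    return out


def enrich_hashed(orders, customers):
    """Build a dict once (O(customers)); each lookup is then O(1) on average."""
    region_by_id = {c["customer_id"]: c["region"] for c in customers}
    return [
        {**o, "region": region_by_id[o["customer_id"]]}
        for o in orders
        if o["customer_id"] in region_by_id  # same inner-join semantics as the baseline
    ]


# Hypothetical data: 500 customers; some orders reference unknown customers (ids 500..599).
customers = [{"customer_id": i, "region": "EU" if i % 2 else "US"} for i in range(500)]
orders = [{"order_id": n, "customer_id": n % 600} for n in range(2_000)]

enriched = enrich_hashed(orders, customers)
```

The same idea underlies hash joins in databases: pay once to build an index on the smaller side, then stream the larger side past it.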

Learn ETL optimization techniques to improve data pipeline speed, scalability, and reliability in your analytics workflows. Continuously monitor the performance of your ETL processes and identify areas for further improvement. Implement a feedback loop so that your optimization strategies keep performance high as data volumes and processing requirements evolve. Effective retry logic and alerting are also crucial.
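A minimal sketch of retry logic with alerting, assuming transient failures surface as `ConnectionError` or `TimeoutError`. `run_with_retry` and `send_alert` are hypothetical helpers; in practice the alert would go to your paging or chat system rather than the log.

```python
import logging
import random
import time

log = logging.getLogger("etl.retry")


def send_alert(message):
    """Hypothetical alert hook: replace with your paging or chat integration."""
    log.error("ALERT: %s", message)


def run_with_retry(task, *, attempts=4, base_delay=0.5, max_delay=30.0,
                   retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Run task(); on a transient error, back off exponentially (capped, with jitter) and retry.

    After the final failed attempt, send an alert and re-raise so the scheduler marks the job failed.
    """
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except retry_on as exc:
            if attempt == attempts:
                send_alert(f"{getattr(task, '__name__', 'task')} failed after {attempts} attempts: {exc}")
                raise
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            delay *= random.uniform(0.5, 1.0)  # jitter avoids synchronized retries
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)


# Usage: a hypothetical source that fails twice, then succeeds.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return ["row1", "row2"]


rows = run_with_retry(flaky_extract, sleep=lambda s: None)  # no real waiting in this demo
```

Capped exponential backoff with jitter keeps retries from hammering a struggling source, and re-raising after the last attempt keeps the failure visible to whatever orchestrates the job.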

By optimizing each stage (extraction, transformation, and loading), data engineers can significantly improve performance and scalability.
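On the extraction side, one widely used technique is incremental extraction with a watermark: remember the highest change timestamp seen so far and pull only newer rows on the next run. The sketch uses SQLite from Python's standard library; the `orders` table, its `updated_at` column, and `extract_incremental` are assumptions for illustration.

```python
import sqlite3


def extract_incremental(conn, last_watermark):
    """Pull only rows changed since the previous run instead of re-reading the whole table.

    Assumes a hypothetical `orders` table whose `updated_at` value only ever increases.
    Returns the new rows and the watermark to persist for the next run.
    """
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at INTEGER)")
# An index on the watermark column lets the WHERE clause avoid a full table scan.
conn.execute("CREATE INDEX idx_orders_updated_at ON orders (updated_at)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, 100), (2, 20.0, 200), (3, 30.0, 300)],
)

batch, wm = extract_incremental(conn, 0)       # first run: all three rows
conn.execute("INSERT INTO orders VALUES (4, 40.0, 400)")
batch2, wm2 = extract_incremental(conn, wm)    # second run: only the new row
```

Between runs the watermark would normally be persisted, for example in a control table. Because the filter uses `>`, a row written later with an older or equal timestamp would be missed, which is why the sketch assumes a strictly increasing change column.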

Learn how to optimize your ETL processes for efficient data integration, and discover strategies to improve performance and reduce costs. ETL developers face continuous pressure to keep data pipelines optimized, scalable, and robust. In this comprehensive guide, we dive into the core aspects of ETL performance optimization, offering proven strategies, best practices, and practical examples to help you excel in your role. From optimizing workflows to selecting the right tools, empower your team to build a seamless, scalable ETL framework for better data management and analytics.

Explore ETL optimization strategies for better data processing, cost efficiency, and business insights. Remember, ETL performance optimization is not a destination: as the data landscape evolves, so must your ETL processes. A botched ETL job is a ticking time bomb that can set off a whirlwind of inaccuracies.

Follow these five ETL best practices to avoid a data pipeline disaster.

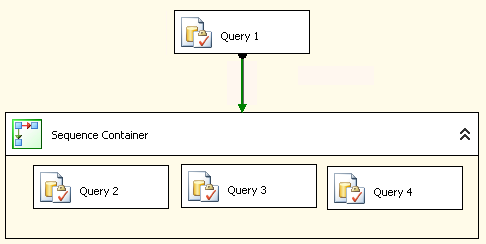

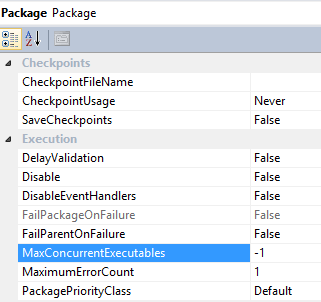

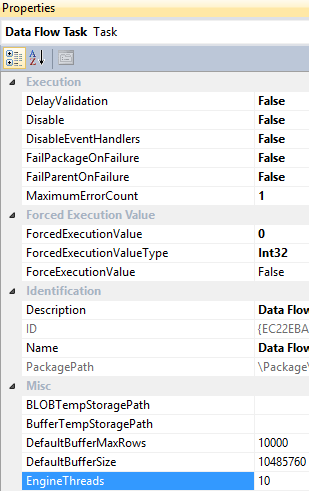

As organizations scale their data platforms, performance is no longer a luxury; it's a core requirement. For teams building on Databricks, achieving optimal performance means faster insights. In this dissertation work, we examine optimization methods for ETL processes that minimize the execution time of ETL jobs. SQL Server Integration Services (SSIS) is a powerful ETL tool widely used for data migration and integration.

However, as data volumes grow and complexity increases, performance issues can emerge. Optimizing SSIS packages is essential to ensure they run efficiently, reduce execution time, and use resources effectively. In this blog, we'll explore proven tips. Here is a list of solutions that can help you improve ETL performance and maximize throughput.
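For the load step, batching writes is one general way to raise throughput. This is a generic Python/SQLite sketch, not an SSIS feature: `load_in_batches` and the `sales` table are hypothetical, and on a production warehouse the target's native bulk-load path would usually be faster still.

```python
import sqlite3
from itertools import islice


def load_in_batches(conn, rows, batch_size=1_000):
    """Insert rows in fixed-size batches inside a single transaction.

    One executemany call per batch avoids a statement round trip and a commit per row.
    Returns the number of rows loaded.
    """
    it = iter(rows)
    total = 0
    with conn:  # commits on success, rolls back the whole load on error
        while True:
            batch = list(islice(it, batch_size))
            if not batch:
                break
            conn.executemany("INSERT INTO sales (id, amount) VALUES (?, ?)", batch)
            total += len(batch)
    return total


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, amount REAL)")

# A generator keeps memory flat: only one batch is materialized at a time.
loaded = load_in_batches(conn, ((i, i * 0.5) for i in range(2_500)), batch_size=1_000)
row_count = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
```

Batch size is a tuning knob: larger batches mean fewer round trips but more memory per batch and more work to redo if a batch fails.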

The key to successfully tuning your jobs for optimal performance is to identify and eliminate bottlenecks.

The first step in performance tuning is to identify the source of bottlenecks. Learn how to improve your ETL process for moving and transforming data from various sources to a target destination by following tips and best practices on data sources, tools, logic, and code. Adhering to these guidelines minimizes errors, reduces manual effort, and maintains system consistency.
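To locate a bottleneck inside a Python transformation, the standard library's `cProfile` and `pstats` modules can rank functions by time spent. The pipeline below is a hypothetical example with a deliberately quadratic `slow_dedupe` step (a membership test on a growing list), which should appear near the top of the cumulative report.

```python
import cProfile
import io
import pstats


def parse(rows):
    """Split raw CSV-like lines into fields."""
    return [r.split(",") for r in rows]


def slow_dedupe(records):
    """Keep the first record per key. Deliberately slow: `in` on a list is O(n)."""
    seen, out = [], []
    for rec in records:
        key = rec[0]
        if key not in seen:
            seen.append(key)
            out.append(rec)
    return out


def pipeline(rows):
    return slow_dedupe(parse(rows))


# Hypothetical input: 6,000 lines with 3,000 distinct keys.
rows = [f"{i % 3000},value{i}" for i in range(6000)]

profiler = cProfile.Profile()
profiler.enable()
result = pipeline(rows)
profiler.disable()

# Rank functions by cumulative time and keep the top five entries.
buf = io.StringIO()
pstats.Stats(profiler, stream=buf).sort_stats("cumulative").print_stats(5)
report = buf.getvalue()
print(report)
```

Here the fix would be to track seen keys in a `set`; the point is that profiling, rather than guessing, shows where the time actually goes.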